The Inner Workings of a Blaston Bot

Hi! My name is Simon Cutajar, and I’m one of the game programmers at Resolution Games. I’ve spent the last year using Unity and C# to work on different aspects of Blaston. Today, I thought I’d delve into some of the problems that needed to be solved when writing virtual reality AI bots for Blaston.

The bot code was initially written by Mike Booth, best known for his work on the bots in Counter-Strike as well as the AI Director in Left 4 Dead. After the game’s release on October 8, 2020, I took over the code base and kept it up to date, particularly when new weapons were introduced.

Anatomy of a Blaston Bot

So how does one go about creating an AI agent that can play Blaston effectively?

First, we need to look at what a Blaston bot is made of. When a player spawns into the arena to duel, their avatar consists of a head, a torso, a left hand, and a right hand. Just as a player can fire a gun while dodging incoming bullets and glancing at a weapon that has just spawned, a bot must be able to do all of these things at the same time.

We achieve this by having each part of the body act somewhat independently. Hands focus on picking up and using weapons, the head focuses on looking at incoming projectiles, and the torso focuses on dodging projectiles that the head has seen. By delegating specific actions to specific body parts, we can have them act at the same time in a similar way to human players.
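Here is a minimal Python sketch of independent body parts. Blaston’s bots are written in C# in Unity, and this is not their code. The `Blackboard`, `Head`, `Torso`, `Hand`, and `Bot` classes are hypothetical. Each part runs its own update every tick, and the torso only reacts to projectiles that the head has recorded as seen.

```python
from typing import List, Set


class Blackboard:
    """Hypothetical shared memory that lets body parts read what the head has seen."""

    def __init__(self) -> None:
        self.seen_projectiles: Set[str] = set()


class Head:
    def __init__(self, board: Blackboard) -> None:
        self.board = board

    def update(self, visible: List[str]) -> str:
        # Only projectiles currently in view are recorded as "seen".
        self.board.seen_projectiles.update(visible)
        return f"head: looking at {visible[0]}" if visible else "head: scanning"


class Torso:
    def __init__(self, board: Blackboard) -> None:
        self.board = board

    def update(self) -> str:
        # The torso can only dodge projectiles the head has already seen.
        count = len(self.board.seen_projectiles)
        return f"torso: dodging {count} projectile(s)" if count else "torso: idle"


class Hand:
    def __init__(self, side: str) -> None:
        self.side = side

    def update(self) -> str:
        return f"{self.side} hand: handling weapons"


class Bot:
    def __init__(self) -> None:
        self.board = Blackboard()
        self.head = Head(self.board)
        self.torso = Torso(self.board)
        self.hands = [Hand("left"), Hand("right")]

    def tick(self, visible: List[str]) -> List[str]:
        # Every part updates on every frame, so all four act "at the same time".
        actions = [self.head.update(visible), self.torso.update()]
        actions += [hand.update() for hand in self.hands]
        return actions


bot = Bot()
print(bot.tick([]))          # nothing seen yet, so the torso stays idle
print(bot.tick(["nova-1"]))  # the head spots a projectile and the torso starts dodging
```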

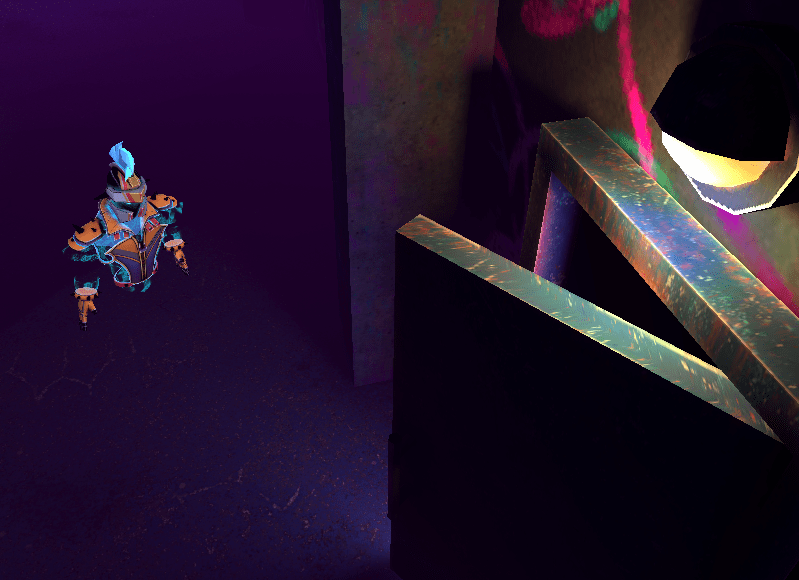

Warning: Nova detected

Each body part keeps track of its own state, which is determined by the behaviour it is currently performing. For example, a hand cannot pick up a weapon while it is firing a gun, and once the gun is empty, the hand should get rid of it before attempting to pick up a new weapon.

In the code, this is modelled using a finite state machine. The image below shows an example of the possible states that a hand in a Blaston bot can be in, and how the state changes when an action occurs.

State machine diagram for a bot's hand in Blaston

For example, if a Viper spawned within grabbing distance, the hand closest to the weapon would change state to PickUpGun and move towards the Viper until it was close enough to grab it. Then, it would move to the ShootGun state, which would move the gun to an appropriate position, aim it at the opposing player, and fire. Finally, once the gun is out of ammo, the hand would choose whether it should drop the tool (in the DropTool state) or throw it at the opposing player (in the ThrowEmptyTool state).
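The hand’s state machine can be sketched as a transition table. This is a hypothetical Python illustration, not the game’s code. The `PickUpGun`, `ShootGun`, `DropTool`, and `ThrowEmptyTool` states follow the walkthrough above. The `IDLE` state for a free hand, the event names (`weapon_in_reach`, `grabbed`, `weapon_gone`, `out_of_ammo`, `released`), and the `prefer_throw` flag are all assumptions. The flag stands in for the bot’s real decision between throwing and dropping.

```python
from enum import Enum, auto


class HandState(Enum):
    IDLE = auto()  # assumed state for a free hand
    PICK_UP_GUN = auto()
    SHOOT_GUN = auto()
    DROP_TOOL = auto()
    THROW_EMPTY_TOOL = auto()


# (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (HandState.IDLE, "weapon_in_reach"): HandState.PICK_UP_GUN,
    (HandState.PICK_UP_GUN, "grabbed"): HandState.SHOOT_GUN,
    (HandState.PICK_UP_GUN, "weapon_gone"): HandState.IDLE,
    (HandState.DROP_TOOL, "released"): HandState.IDLE,
    (HandState.THROW_EMPTY_TOOL, "released"): HandState.IDLE,
}


class HandStateMachine:
    def __init__(self, prefer_throw: bool) -> None:
        self.state = HandState.IDLE
        self.prefer_throw = prefer_throw  # hypothetical stand-in for the real decision

    def handle(self, event: str) -> HandState:
        if self.state is HandState.SHOOT_GUN and event == "out_of_ammo":
            # Empty gun: either throw it at the opponent or simply drop it.
            self.state = HandState.THROW_EMPTY_TOOL if self.prefer_throw else HandState.DROP_TOOL
        else:
            # Events with no transition from the current state are ignored,
            # e.g. a hand that is shooting will not start picking up another weapon.
            self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


hand = HandStateMachine(prefer_throw=True)
for event in ["weapon_in_reach", "grabbed", "weapon_in_reach", "out_of_ammo", "released"]:
    print(event, "->", hand.handle(event).name)
```

A table keeps every legal transition in one place, so an action that is not allowed in the current state (such as picking up a weapon mid-shot) simply has no entry.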

Of course, certain restrictions are added to make the bots behave believably. A hand won’t shoot off towards every weapon that spawns, since arms aren’t infinitely long. The bot also won’t know that something has spawned behind it, since it doesn’t have eyes in the back of its head. Designers can configure these parameters to create different bots.
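Here is a sketch of how such limits might be expressed, assuming designers tune a per-bot reach distance and a view cone. `BotProfile`, its fields, its default values, and the helper functions are hypothetical. The checks themselves are standard geometry: a distance test against the shoulder, and an angle test against the head’s forward direction.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class BotProfile:
    """Hypothetical designer-tunable limits; field names and defaults are illustrative."""
    reach: float = 0.8          # how far (in metres) a hand can get from its shoulder
    fov_degrees: float = 110.0  # full angle of the head's view cone


def can_see(profile: BotProfile, head_pos: Vec3, head_forward: Vec3, target: Vec3) -> bool:
    """True if the target lies inside the head's view cone (no eyes in the back of its head)."""
    to_target = tuple(t - h for t, h in zip(target, head_pos))
    dist = math.hypot(*to_target)
    if dist == 0.0:
        return True
    cos_angle = sum(a * b for a, b in zip(to_target, head_forward)) / (dist * math.hypot(*head_forward))
    return cos_angle >= math.cos(math.radians(profile.fov_degrees / 2))


def can_reach(profile: BotProfile, shoulder_pos: Vec3, target: Vec3) -> bool:
    """True if the target is within arm's length of the shoulder."""
    return math.dist(shoulder_pos, target) <= profile.reach


def should_grab(profile: BotProfile, head_pos: Vec3, head_forward: Vec3,
                shoulder_pos: Vec3, weapon_pos: Vec3) -> bool:
    # A weapon is only a candidate if the bot has seen it AND can actually reach it.
    return can_see(profile, head_pos, head_forward, weapon_pos) and can_reach(profile, shoulder_pos, weapon_pos)
```

Making a "cautious" or "sloppy" bot then becomes a matter of handing it a different `BotProfile`.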

Agents playing Blaston

At its core, Blaston is a VR game where you duel another player from a distance. These duels are performed using a variety of different weapons, each with their own specific abilities and uses. Players are expected to use these tools to overcome their opponent while dodging any opposing projectiles. They’re also restricted to a relatively small podium space; players can’t just run off at the first sign of danger.

This means that the bot is expected to do both of Blaston’s core activities well: using weapons and dodging incoming projectiles.

At a high level, the game is built on a system of messages. Players moving to a new position, players picking up weapons, and projectiles travelling through space are all represented as individual messages that internal systems generate and process. For the bots to work at all, they need to be able to send messages to, and receive messages from, these systems.
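The post doesn’t describe Blaston’s messaging system in detail, so the following is only a generic publish/subscribe sketch in Python. `MessageBus`, `BotBrain`, and the topic names are hypothetical. The point is that the bot both listens for game messages and acts by publishing messages of its own.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Message = Dict[str, Any]
Handler = Callable[[Message], None]


class MessageBus:
    """Hypothetical publish/subscribe hub standing in for the game's internal systems."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> None:
        # Iterate over a copy so a handler can subscribe new handlers without disturbing this loop.
        for handler in list(self._handlers[topic]):
            handler(message)


class BotBrain:
    """Receives the same kinds of messages a human player's client would."""

    def __init__(self, bus: MessageBus) -> None:
        self.bus = bus
        self.projectiles: Dict[int, Any] = {}
        bus.subscribe("projectile_moved", self.on_projectile_moved)
        bus.subscribe("weapon_spawned", self.on_weapon_spawned)

    def on_projectile_moved(self, msg: Message) -> None:
        # Keep the latest known position of every projectile.
        self.projectiles[msg["id"]] = msg["position"]

    def on_weapon_spawned(self, msg: Message) -> None:
        # Act by sending a message back through the same bus.
        self.bus.publish("grab_request", {"weapon": msg["weapon"], "hand": "right"})


bus = MessageBus()
brain = BotBrain(bus)
bus.subscribe("grab_request", lambda msg: print("game received:", msg))
bus.publish("weapon_spawned", {"weapon": "Viper"})
```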

Firing Weapons

Let’s tackle using weapons first.

The bot is expected to know how to use most weapons effectively: it should know how to throw grenades, defend itself with shields, and use offensive weapons. To do so, the bot first has to recognise that one of its weapons has spawned near it. Once this happens, if a hand is free, that hand should move towards the weapon and attempt to pick it up. Finally, the bot should use the weapon effectively, depending on its type.

If the bot has picked up an offensive weapon such as the Viper or the Lance, it can simply aim at the opponent and fire, making sure to fire again once the weapon has reloaded. However, if the bot has picked up a grenade, it needs to know how to throw it. This is done by quickly moving its hand backwards and then forwards, releasing the grenade to launch it at a particular target. If the bot has instead picked up a shield (such as the Aegis Shield or the Cortex Shield), it should aim it at incoming projectiles. If it has picked up a Bunker Barrier, it should aim it at an empty barrier slot and trigger it.
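One way to express this per-weapon behaviour is a lookup from weapon category to a high-level plan. The sketch is hypothetical. The categories and weapon names come from the paragraph above, but the action names and tables are illustrative. The real bot moves its hands continuously rather than stepping through a list of strings.

```python
from enum import Enum
from typing import Dict, List


class WeaponKind(Enum):
    OFFENSIVE = "offensive"  # e.g. Viper, Lance
    GRENADE = "grenade"
    SHIELD = "shield"        # e.g. Aegis Shield, Cortex Shield
    BARRIER = "barrier"      # e.g. Bunker Barrier


# Hypothetical high-level action plans; the real bot drives hand positions every frame.
ACTION_PLANS: Dict[WeaponKind, List[str]] = {
    WeaponKind.OFFENSIVE: ["aim_at_opponent", "fire", "wait_for_reload"],
    # A throw is a quick wind-up backwards, a fast swing forwards, then release.
    WeaponKind.GRENADE: ["move_hand_back", "swing_hand_forward", "release_at_target"],
    WeaponKind.SHIELD: ["aim_at_incoming_projectile"],
    WeaponKind.BARRIER: ["aim_at_empty_barrier_slot", "trigger"],
}

# Which weapons belong to which kind; only weapons named in this post are listed.
WEAPON_KINDS: Dict[str, WeaponKind] = {
    "Viper": WeaponKind.OFFENSIVE,
    "Lance": WeaponKind.OFFENSIVE,
    "Aegis Shield": WeaponKind.SHIELD,
    "Cortex Shield": WeaponKind.SHIELD,
    "Bunker Barrier": WeaponKind.BARRIER,
}


def plan_for(weapon: str) -> List[str]:
    """Look up how the bot should use a weapon it has just picked up."""
    return ACTION_PLANS[WEAPON_KINDS[weapon]]


print(plan_for("Viper"))
```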

Finally, once the weapon is spent, the bot should get rid of it to free up a hand for a new weapon. It can do this either by throwing the weapon at its opponent (using a motion similar to throwing a grenade) or by simply dropping it.

Dodging Projectiles

Next, we’ll discuss dodging incoming projectiles. As mentioned before, in order to keep things believable, a bot can only dodge a projectile it has already seen.

Predicted positions in time of the incoming Hammer projectiles.

Once the bot has seen a projectile, it predicts where that projectile will travel over the next couple of seconds in order to find a safe spot on the podium to move to. To do this, it takes the total volume the bot can move within (a cylinder the size of the podium) and breaks it into a 3D grid. The length of this prediction window is carefully balanced so that the bot can dodge effectively without consuming too much processing power.
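Here is a minimal sketch of these two ingredients. It assumes projectiles travel in straight lines at constant velocity and that the podium is modelled as a vertical cylinder. `predict_positions` and `cylinder_grid` are hypothetical helpers. `horizon` is the prediction window and `dt` the time step, so the number of samples, and the work done, grows as `horizon / dt`.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
GridCell = Tuple[int, int, int]


def predict_positions(pos: Vec3, vel: Vec3, horizon: float, dt: float) -> List[Vec3]:
    """Sample a projectile's future positions every dt seconds up to `horizon` seconds.

    Assumes straight-line, constant-velocity motion (curved shots would need their own model).
    """
    steps = int(round(horizon / dt))
    return [tuple(p + v * k * dt for p, v in zip(pos, vel)) for k in range(steps + 1)]


def cylinder_grid(radius: float, height: float, cell: float) -> List[GridCell]:
    """Index every cell whose centre lies inside a vertical cylinder centred on the podium.

    i and k index the horizontal plane (centred on the podium's axis); j counts up from the floor.
    """
    n_side = math.ceil(radius / cell)
    n_up = math.ceil(height / cell)
    cells: List[GridCell] = []
    for i in range(-n_side, n_side):
        for k in range(-n_side, n_side):
            cx, cz = (i + 0.5) * cell, (k + 0.5) * cell
            if cx * cx + cz * cz <= radius * radius:
                cells.extend((i, j, k) for j in range(n_up))
    return cells


samples = predict_positions((0.0, 1.5, 10.0), (0.0, 0.0, -8.0), horizon=2.0, dt=0.1)
grid = cylinder_grid(radius=1.0, height=2.0, cell=0.25)
print(len(samples), len(grid))
```

Doubling the horizon or halving the cell size multiplies the work the later search must do, which is the trade-off the text describes.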

Using a breadth-first search, we can search through a 4D space (the 3D grid plus time) to determine the safest place on the podium to be. This is illustrated below using wireframe spheres in different colours that show how dangerous each area is. Dangerous sections of the grid are marked in red or orange, while safe sections are marked in green.

Note the incoming Enercage.

The playspace divided into a 3D grid. Green spheres are safe spaces, while red spheres are dangerous spaces.
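The search can be sketched as a breadth-first expansion over (cell, time) states. Every state at time `t` is expanded before any state at `t + 1`, and for each cell only the lowest-damage way of reaching it is kept. This is a hypothetical Python illustration, not Blaston’s implementation. It assumes the bot can move at most one cell per time step. It also assumes a `danger(cell, t)` function (for example, one built from predicted projectile positions) is already available.

```python
from typing import Callable, Dict, List, Set, Tuple

Cell = Tuple[int, int, int]
DangerFn = Callable[[Cell, int], float]  # damage expected in a cell at a time step


def neighbours(cell: Cell, cells: Set[Cell]) -> List[Cell]:
    """Cells reachable in one time step, including staying put (assumes one cell per step)."""
    x, y, z = cell
    return [
        (x + dx, y + dy, z + dz)
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        for dz in (-1, 0, 1)
        if (x + dx, y + dy, z + dz) in cells
    ]


def safest_path(start: Cell, cells: Set[Cell], danger: DangerFn, steps: int) -> List[Cell]:
    """Breadth-first expansion over (cell, time), keeping the lowest-damage arrival per cell."""
    frontier: Dict[Cell, Tuple[float, List[Cell]]] = {start: (danger(start, 0), [start])}
    for t in range(1, steps + 1):
        next_frontier: Dict[Cell, Tuple[float, List[Cell]]] = {}
        for cell, (damage, path) in frontier.items():
            for n in neighbours(cell, cells):
                total = damage + danger(n, t)
                if n not in next_frontier or total < next_frontier[n][0]:
                    next_frontier[n] = (total, path + [n])
        frontier = next_frontier
    # The end of the lowest-damage path is the safest place to be at the horizon.
    return min(frontier.values(), key=lambda entry: entry[0])[1]
```

Time only moves forward, so every way into layer `t + 1` comes from layer `t`. Keeping the cheapest arrival per cell is therefore enough to find the minimum-damage route.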

Projectiles are deemed dangerous based on how much damage they can do. Bots always attempt to minimise the damage they take. If a bot is trapped and must choose between taking 30 damage from a Nova and 6 damage from a Wildcat, it will walk into the weaker projectile. For particularly fast weapons such as the Viper or Viper Ellipse, the bot moves to the back of the platform as soon as you pick one up, giving it time to perform enough dodging calculations to get out of the way.
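Weighting danger by damage might look like this: a cell’s danger at a time step is the total damage of the projectiles predicted to be within a hit radius of it. `PredictedProjectile`, `cell_danger`, `least_damaging`, and the `hit_radius` value are hypothetical. The 30 and 6 damage figures are the Nova and Wildcat example from the text.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PredictedProjectile:
    name: str
    damage: float
    positions: List[Vec3]  # predicted position at each time step


def cell_danger(centre: Vec3, t: int, projectiles: List[PredictedProjectile], hit_radius: float) -> float:
    """Sum the damage of every projectile predicted to pass near this point at time t."""
    total = 0.0
    for p in projectiles:
        if t < len(p.positions) and math.dist(centre, p.positions[t]) <= hit_radius:
            total += p.damage
    return total


def least_damaging(options: Dict[str, Vec3], t: int,
                   projectiles: List[PredictedProjectile], hit_radius: float) -> str:
    """When every option hurts, pick the one that costs the least health."""
    return min(options, key=lambda name: cell_danger(options[name], t, projectiles, hit_radius))


nova = PredictedProjectile("Nova", 30.0, [(0.0, 1.5, 4.0), (0.0, 1.5, 0.0)])
wildcat = PredictedProjectile("Wildcat", 6.0, [(1.0, 1.5, 4.0), (1.0, 1.5, 0.0)])
spots = {"left": (0.0, 1.5, 0.0), "right": (1.0, 1.5, 0.0)}
print(least_damaging(spots, 1, [nova, wildcat], hit_radius=0.3))  # right
```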

One way to save processing power is to skip dodging projectiles that we know won’t hit the bot, for example because a barrier the bot placed earlier will stop them. To check whether a projectile will collide with a placed barrier, we perform a ray cast. We draw a straight line in the direction the projectile is travelling and check whether that line intersects the barrier. For projectiles that don’t move in straight lines (such as projectiles from Ellipse weapons, the Nova Helix, or thrown grenades), the curved trajectory is approximated by a series of straight ray casts. Once we know that a projectile will collide with a barrier, we can drop it from the bot’s dodging calculations, saving time and processing power.
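Here is a sketch of the barrier check, assuming the barrier can be approximated by an axis-aligned box. It uses the standard slab method for segment/box intersection and checks bounded segments rather than infinite rays. A straight shot is a single segment, while a curved trajectory is passed in as a list of sampled points, giving one short straight cast per pair of neighbouring points. The function names and the box approximation are assumptions, not Blaston’s code.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def segment_hits_box(p0: Vec3, p1: Vec3, lo: Vec3, hi: Vec3, eps: float = 1e-12) -> bool:
    """Slab test: does the segment p0 -> p1 pass through the axis-aligned box [lo, hi]?"""
    t_enter, t_exit = 0.0, 1.0
    for axis in range(3):
        d = p1[axis] - p0[axis]
        if abs(d) < eps:
            # Segment is parallel to this pair of planes: it must already lie between them.
            if p0[axis] < lo[axis] or p0[axis] > hi[axis]:
                return False
            continue
        t1 = (lo[axis] - p0[axis]) / d
        t2 = (hi[axis] - p0[axis]) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_enter = max(t_enter, t1)
        t_exit = min(t_exit, t2)
        if t_enter > t_exit:
            return False
    return True


def trajectory_blocked(points: Sequence[Vec3], lo: Vec3, hi: Vec3) -> bool:
    """True if any straight piece of the (possibly curved) path hits the barrier box."""
    return any(segment_hits_box(a, b, lo, hi) for a, b in zip(points, points[1:]))


barrier_lo, barrier_hi = (-0.5, 0.0, 1.0), (0.5, 2.0, 1.2)
print(trajectory_blocked([(0.0, 1.0, 5.0), (0.0, 1.0, 0.0)], barrier_lo, barrier_hi))
```

More sample points follow a curve more faithfully but cost more casts, the same accuracy-versus-cost balance as the dodging prediction.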

Instilling Emotion

The final step in making bots feel more lifelike is to have them play emotes before and after a match. These emotes are prerecorded, cleaned up in the game engine, and categorised depending on whether they’re used for introductions, victory poses, or defeat poses. Finally, a designer assigns emotes to different bots to give them different personalities.
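Assigning emotes can be as simple as a per-bot table from category to a list of emotes. Everything in this sketch is hypothetical, including the bot names and emote names. A seeded `random.Random` is passed in so that the choice is reproducible.

```python
import random
from enum import Enum
from typing import Dict, List


class EmoteCategory(Enum):
    INTRO = "intro"
    VICTORY = "victory"
    DEFEAT = "defeat"


# Hypothetical per-bot configuration a designer might author; emote names are made up.
BOT_EMOTES: Dict[str, Dict[EmoteCategory, List[str]]] = {
    "cocky_bot": {
        EmoteCategory.INTRO: ["stretch", "taunt"],
        EmoteCategory.VICTORY: ["flex"],
        EmoteCategory.DEFEAT: ["shrug"],
    },
    "polite_bot": {
        EmoteCategory.INTRO: ["wave"],
        EmoteCategory.VICTORY: ["bow"],
        EmoteCategory.DEFEAT: ["clap"],
    },
}


def pick_emote(bot: str, category: EmoteCategory, rng: random.Random) -> str:
    """Choose one of the emotes this bot's designer assigned to the given category."""
    return rng.choice(BOT_EMOTES[bot][category])


print(pick_emote("cocky_bot", EmoteCategory.INTRO, random.Random(0)))
```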

We hope you enjoyed this quick, high-level overview of how the bots in Blaston work! If you also like writing AI code, we’re hiring for our ML/AI team, as well as for many other programming roles.

Simon Cutajar is a Maltese game programmer currently based in Sweden, and has worked as a programmer for 4 years. He has a PhD from The Open University, UK, where he focused on using machine learning to generate music for video games.