Behind the Scenes of Spatial Ops: Building a Seamless MR Experience

Have you ever wondered how an MR (mixed reality) game that seamlessly blends physical and virtual spaces is created? We sat down with Spatial Ops’ Creative Lead Niklas and Code Lead Dhimas to dive into this topic.

General Development Process

Starting Off: What was the initial vision behind Spatial Ops, and how did it evolve throughout development?

The initial vision for Spatial Ops was a simple one-room shooter. It evolved into a full-blown at-home laser tag experience with an ambitious map editor that lets people map out any physical space and play anywhere.

We also decided to add an entirely new single-player campaign, akin to Time Crisis on steroids, so that people with limited space can also enjoy the fast-paced arcade shooter known today as Spatial Ops.

Unique Challenges: What were the biggest challenges you faced in creating a game that blends physical and virtual spaces seamlessly?

There were many technical challenges to overcome when blending physical and virtual space. How do we make sure players are correctly positioned relative to each other and to the physical space? How do we keep players reliably in sync through extended play sessions? How can we let people play in small spaces as well as huge ones of up to 5,000 square feet and still have an enjoyable experience? How do we encourage people to move, and how do we adapt the weapons for a simple, seamless pickup-and-play experience that requires no prior experience? All of these questions, and many more, had to be answered and resolved, and we believe the final product speaks for itself.

Co-Location in Spatial Ops

Concept of Co-location: For those unfamiliar, can you explain what co-location means in Spatial Ops and why it’s a critical feature?

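Co-location means that players who share the same physical space also share the same space in the game: their virtual positions line up with the real room and with each other, which allows them to interact with one another and with the virtual world.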

Technical Challenges: What were the main technical hurdles in ensuring multiple players could share the same physical space without latency issues or misalignment?

We had to address edge cases with Meta's shared anchors, informing players about potential alignment issues and providing clear instructions on how to proceed if problems arose.

Optimizing the data packets sent from the client to the host during gameplay was also crucial. VR headset input is inherently noisy, unlike console or PC input, which typically consists of simple binary values (pressed or not pressed). VR input carries a much wider range of continuous data, such as head and hand tracking and finger positions. The Fusion Stats debug tool in Photon Fusion proved invaluable in diagnosing data traffic issues.

Photon Fusion stats
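To make the bandwidth point concrete, here is a minimal sketch of one common way to shrink continuous tracking data before sending it. It is an illustration under stated assumptions, not the team's actual code: each position component is quantized to a 16-bit integer over a known range, and updates whose change falls inside a small dead zone (absorbing tracking jitter) are skipped. The +/- 50 m range, the 2 mm threshold, and the `PoseSender` class are hypothetical, and Python stands in for the C# a Unity project would use.

```python
import struct

# Hypothetical play-area bounds: positions assumed to lie within +/- 50 m of the origin.
RANGE_M = 50.0
SCALE = 32767 / RANGE_M  # int16 steps per metre


def quantize(value: float) -> int:
    """Map a float in [-RANGE_M, RANGE_M] to a signed 16-bit integer (clamping outliers)."""
    clamped = max(-RANGE_M, min(RANGE_M, value))
    return round(clamped * SCALE)


def dequantize(q: int) -> float:
    return q / SCALE


def pack_position(x: float, y: float, z: float) -> bytes:
    """Pack a 3D position into 6 bytes instead of 12 (three float32 values)."""
    return struct.pack("<hhh", quantize(x), quantize(y), quantize(z))


def unpack_position(data: bytes) -> tuple:
    return tuple(dequantize(q) for q in struct.unpack("<hhh", data))


class PoseSender:
    """Hypothetical sender that drops updates smaller than a dead-zone threshold."""

    def __init__(self, threshold_m: float = 0.002):
        self.threshold_m = threshold_m
        self.last_sent = None

    def maybe_send(self, pos):
        """Return packed bytes to transmit, or None if the change is too small."""
        if self.last_sent is not None:
            delta = max(abs(a - b) for a, b in zip(pos, self.last_sent))
            if delta < self.threshold_m:
                return None  # likely jitter: not worth a packet
        self.last_sent = pos
        return pack_position(*pos)
```

With this range each axis has a resolution of roughly 1.5 mm, and a position costs 6 bytes instead of 12. The dead zone trades away tiny movements for less traffic, so its size would need tuning against how responsive the game has to feel.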

Design Innovations: Were there any specific tools or systems you created to test and iterate on co-location during development?

We primarily used the standard Unity tools. Being able to playtest within the Unity Editor, without putting on a Meta Quest headset for every test iteration, was a big help. It didn't fully replace headset testing, especially for features that rely on Meta's shared anchor API, but testing core gameplay mechanics in the editor still significantly improved our overall development velocity.

Editor view

Tools to Speed Up Campaign Level Design

The Need for Speed: Why was it important to develop tools to accelerate campaign level design? Were there bottlenecks you were looking to solve?

Almost all of the levels require fine-tuning and precise timing to give players an amazing, fast-paced, and coordinated experience. Because the levels are handcrafted, we needed great tools to achieve this within the available timeframe. With a small team and many designs to support, we had to make some tough decisions about what to prioritize and what had to take a back seat.

Tool Overview: Can you walk us through the tools you built to streamline the creation of campaign levels?

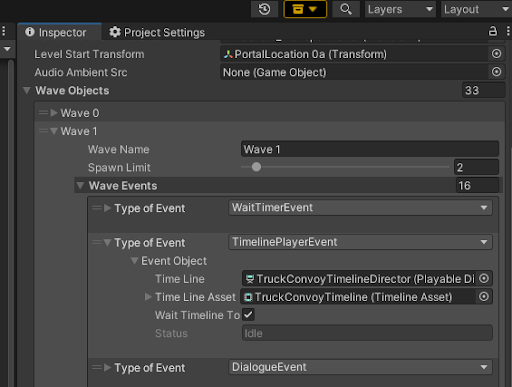

For campaign level creation, we developed two primary tools. The first, the Wave Container, manages the sequenced execution of events within each level. Designers use it to sequence predefined "wave events": C# classes that encapsulate their own start and end logic. Each event manages its own execution and signals when it has finished, so the Wave Container can trigger the next event in the sequence. Designers select these events from a custom dropdown, enter any necessary parameters, and arrange them in the desired order.

Wave Container inspector view
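The sequencing behaviour described above can be sketched roughly as follows. This is a minimal Python illustration, not the Spatial Ops implementation (which is written in C#): the `WaveEvent`, `WaitEvent`, `SpawnBotsEvent`, and `WaveContainer` names are hypothetical, and a per-frame `tick` call stands in for Unity's update loop. Each event decides for itself when it is done by calling `finish()`, and the container only advances once the current event has signalled completion.

```python
from abc import ABC, abstractmethod


class WaveEvent(ABC):
    """Hypothetical base class: encapsulates start/end logic and reports completion."""

    def __init__(self):
        self.finished = False

    @abstractmethod
    def on_start(self, log): ...

    def tick(self, dt, log):
        """Called once per frame while the event is active; default does nothing."""

    def on_end(self, log):
        pass

    def finish(self):
        self.finished = True


class WaitEvent(WaveEvent):
    def __init__(self, seconds):
        super().__init__()
        self.remaining = seconds

    def on_start(self, log):
        log.append(f"wait {self.remaining}s")

    def tick(self, dt, log):
        self.remaining -= dt
        if self.remaining <= 0:
            self.finish()


class SpawnBotsEvent(WaveEvent):
    def __init__(self, count):
        super().__init__()
        self.count = count

    def on_start(self, log):
        log.append(f"spawn {self.count} bots")
        self.finish()  # an instantaneous event finishes as soon as it starts


class WaveContainer:
    def __init__(self, events):
        self.events = list(events)
        self.index = -1
        self.log = []
        self._advance()

    @property
    def done(self):
        return self.index >= len(self.events)

    def _advance(self):
        self.index += 1
        if not self.done:
            self.events[self.index].on_start(self.log)

    def tick(self, dt):
        if self.done:
            return
        current = self.events[self.index]
        if not current.finished:
            current.tick(dt, self.log)
        # Chain through every event that has signalled completion, so events
        # that finish inside on_start do not cost an extra frame.
        while not self.done and self.events[self.index].finished:
            self.events[self.index].on_end(self.log)
            self._advance()
```

In this sketch, reordering or reconfiguring a level means changing only the list passed to `WaveContainer`, which mirrors the idea of designers rearranging events without code changes.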

Our second tool is a Bot State Machine built using Unity's Animator. After evaluating various Asset Store options, we found them either unoptimized or overly complex. Unity's Animator proved to be a surprisingly effective solution: it offers excellent performance, is easy to debug, and has a gentle learning curve for new team members. Complex bot behaviors do require more rules within the Animator, but the ability to use layers and sub-state machines, and to switch animators with minimal performance overhead, made it the ideal choice for our needs.

Bot state machine designer (built on top of Unity's Animator)
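Unity's Animator is configured visually rather than in code, but the core idea it provides here (named states, a set of parameters, and transitions guarded by conditions on those parameters) can be sketched in a few lines. The sketch below is a hypothetical, simplified stand-in with no layers, sub-state machines, or blending, and the state and parameter names are made up. In this sketch, the first transition in list order whose condition holds is taken, and at most one transition fires per update.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Params = Dict[str, object]


@dataclass
class Transition:
    target: str
    condition: Callable[[Params], bool]


@dataclass
class BotStateMachine:
    """Hypothetical Animator-style state machine driven by named parameters."""
    state: str
    transitions: Dict[str, List[Transition]] = field(default_factory=dict)
    params: Params = field(default_factory=dict)

    def add_transition(self, source: str, target: str,
                       condition: Callable[[Params], bool]) -> None:
        self.transitions.setdefault(source, []).append(Transition(target, condition))

    def set(self, name: str, value: object) -> None:
        self.params[name] = value

    def update(self) -> str:
        # Take the first outgoing transition whose condition is satisfied.
        for t in self.transitions.get(self.state, []):
            if t.condition(self.params):
                self.state = t.target
                break
        return self.state


def make_basic_bot() -> BotStateMachine:
    """Hypothetical example wiring: patrol, chase a visible target, retreat when hurt."""
    fsm = BotStateMachine(state="Patrol")
    fsm.add_transition("Patrol", "Chase", lambda p: p.get("target_visible", False))
    fsm.add_transition("Chase", "TakeCover", lambda p: p.get("health", 1.0) < 0.5)
    fsm.add_transition("Chase", "Patrol", lambda p: not p.get("target_visible", False))
    return fsm
```

The game logic only ever sets parameters (`target_visible`, `health`) and the graph decides what happens, which is what keeps behaviors tweakable without touching the code that feeds them.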

Level Design Iteration: How do these tools help your designers quickly prototype, test, and iterate on levels?

Our Wave Container and Bot State Machine tools significantly accelerate level design iteration. The Wave Container's drag-and-drop interface and custom event dropdown allow designers to quickly assemble and reconfigure level sequences without requiring code changes. They can easily experiment with different enemy combinations, event timings, and environmental triggers.

The Bot State Machine, leveraging Unity's Animator, enables rapid prototyping and tweaking of bot behaviors. Designers can adjust bot AI, movement patterns, and reactions within a visual interface, allowing for quick testing and refinement of enemy encounters and level challenges.

For our designers, these tools are invaluable because they can make or break the design and vision. Had we not invested time in building proper tools, we would not have been able to deliver some of the magic moments that have resonated with our audience.

Real-World Interaction: How did designing campaign levels account for real-world environments and player movement in physical spaces?

Designing campaign levels for real-world interaction required establishing clear guidelines for physical space and player movement. We defined standard dimensions for key elements like hallways and rooms to ensure consistent bot navigation and to prevent them from wandering too far. Verticality was also a crucial consideration. We determined appropriate story heights to allow bots to effectively engage players positioned within portal cages, ensuring that bots could shoot down at players and that players could visually track the bots within their limited movement space.

Portal cage placement was carefully planned, not only for bot navigation but also to prevent visual clipping between virtual objects and the real-world passthrough view. This attention to detail ensured a seamless and immersive experience for players interacting with both the virtual and physical environments.

Adaptive Bots for Campaign and Arena Modes

The Role of Bots: What role do bots play in Spatial Ops, and how do they enhance the player experience in both Campaign and Arena modes?

Bots are integral to the Spatial Ops experience, enhancing gameplay in both Campaign and Arena modes.

In Campaign, bots serve a dual purpose. They provide combat challenges, of course, but they also contribute to the narrative and establish the atmosphere of certain levels. For example, in the initial lab rat encounter, the bots are strategically placed near the lab rat gate to subtly convey the agents' lack of preparedness, enriching the storytelling.

In Arena mode, bots are crucial for solo players. Because co-located multiplayer requires at least two participants, bots offer a compelling alternative for those playing alone. They can act as opponents, providing a challenging AI to test skills against, or as allies, allowing players to team up and tackle the Arena challenges together. This ensures that players can always enjoy the Arena mode, regardless of whether they have a second player available.

First test of bots walking and navigating across multiple floors, stairs, and slopes.

Adaptability: How did you design bots to adapt seamlessly to both structured Campaign levels and the more dynamic Arena environments?

Our bot design prioritized adaptability to diverse environments, a key challenge shared by both Campaign and Arena modes. In Campaign, we had multiple designers creating levels with varied layouts, requiring bots to seamlessly navigate, find targets, and act appropriately in any environment. The dynamic nature of Arena, where players exhibit even greater creativity in map design, presented a similar challenge. We couldn't provide pre-set instructions for every possible Arena configuration. Instead, we engineered our bots to dynamically analyze their surroundings and make informed decisions.

We relied on Unity's NavMesh and extensive raycasting for runtime environment analysis. To mitigate the performance cost of these raycast checks, we used Unity's Job System to process them in parallel, which significantly improved performance.

Campaign level NavMesh

A key challenge was teaching bots to identify suitable cover. Our solution involves a multi-step process. When a bot seeks cover, it samples points around its current location in a clockwise fashion. From each point, it performs a raycast towards its target. If the raycast is obstructed by an object, that object is added to a collection of potential cover locations. The bot then evaluates these potential cover points, selecting the best option based on criteria such as distance to the target, distance to the bot itself, and the size of the cover object. This dynamic cover selection process allows bots to intelligently adapt to a wide range of level designs and player-created Arena maps.
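The cover search described above can be sketched in 2D. In this minimal sketch, obstacles are circles whose radius stands in for the size of the cover object, and a ray-circle test that returns the first obstacle hit stands in for a physics raycast. The scoring weights and the preferred engagement range are hypothetical, since the original does not say how the criteria are weighted. The final step, standing just behind the chosen obstacle on the side away from the target, is also an assumption.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Obstacle:
    name: str
    x: float
    y: float
    radius: float  # stand-in for the size of the cover object


def _dist(ax: float, ay: float, bx: float, by: float) -> float:
    return math.hypot(bx - ax, by - ay)


def raycast(p: Point, q: Point, obstacles: List[Obstacle]) -> Optional[Obstacle]:
    """2D stand-in for a raycast from p toward q: first obstacle entered, or None."""
    (px, py), (qx, qy) = p, q
    length = _dist(px, py, qx, qy)
    if length == 0:
        return None
    ux, uy = (qx - px) / length, (qy - py) / length
    hit, hit_s = None, math.inf
    for ob in obstacles:
        s = (ob.x - px) * ux + (ob.y - py) * uy  # distance along the ray to closest approach
        perp_sq = _dist(px, py, ob.x, ob.y) ** 2 - s * s
        if perp_sq > ob.radius ** 2:
            continue  # the ray passes beside this obstacle
        s_enter = s - math.sqrt(ob.radius ** 2 - perp_sq)
        if 0 <= s_enter <= length and s_enter < hit_s:
            hit, hit_s = ob, s_enter
    return hit


def sample_points_clockwise(center: Point, radius: float, count: int) -> List[Point]:
    """Evenly spaced points around the bot, stepping clockwise (y axis up)."""
    cx, cy = center
    return [(cx + radius * math.cos(-2 * math.pi * i / count),
             cy + radius * math.sin(-2 * math.pi * i / count)) for i in range(count)]


def find_cover(bot: Point, target: Point, obstacles: List[Obstacle],
               sample_radius: float = 3.0, samples: int = 16,
               w_bot: float = 1.0, w_target: float = 0.5, w_size: float = 2.0,
               preferred_range: float = 8.0, margin: float = 0.5):
    """Return (best obstacle, cover position) or None. All weights are hypothetical."""
    candidates = {}  # name -> Obstacle; a dict keeps insertion order deterministic
    for p in sample_points_clockwise(bot, sample_radius, samples):
        if any(_dist(p[0], p[1], o.x, o.y) < o.radius for o in obstacles):
            continue  # the bot cannot stand inside an obstacle
        hit = raycast(p, target, obstacles)
        if hit is not None:
            candidates[hit.name] = hit  # this object blocks the line to the target
    if not candidates:
        return None

    def score(ob: Obstacle) -> float:
        return (w_size * ob.radius                             # larger cover scores higher
                - w_bot * _dist(bot[0], bot[1], ob.x, ob.y)    # nearby cover scores higher
                - w_target * abs(_dist(target[0], target[1], ob.x, ob.y) - preferred_range))

    best = max(candidates.values(), key=score)
    # Stand just behind the chosen obstacle, on the side facing away from the target.
    d = _dist(target[0], target[1], best.x, best.y) or 1.0
    cover = (best.x + (best.x - target[0]) / d * (best.radius + margin),
             best.y + (best.y - target[1]) / d * (best.radius + margin))
    return best, cover
```

In the game, batches of raycast checks like these are what the team moved onto the Job System; this sketch simply runs them sequentially.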

Testing Adaptability: How did you ensure bots perform consistently across different player setups, levels, and real-world spaces?

We ensured consistent bot performance across diverse player setups, levels, and real-world spaces by using a unified logic core. While bot behavior varies between game modes and bot types through parameter adjustments and different state flows, the underlying decision-making process remains consistent. This foundational consistency was key.
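A minimal sketch of the "one decision core, different parameters" idea follows. The profile fields (`engage_range`, `retreat_health`, `seeks_cover`), the two profiles, and their values are all hypothetical, and the actual bots express their state flows in the Animator rather than in a single function. The point is only that both modes run the same routine and differ solely in the numbers they pass in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BotProfile:
    """Hypothetical per-mode / per-bot-type tuning values."""
    engage_range: float    # metres within which the bot attacks
    retreat_health: float  # health fraction below which it looks for cover
    seeks_cover: bool


# Made-up example profiles for two bot types.
CAMPAIGN_GRUNT = BotProfile(engage_range=12.0, retreat_health=0.0, seeks_cover=False)
ARENA_OPPONENT = BotProfile(engage_range=20.0, retreat_health=0.4, seeks_cover=True)


def decide(profile: BotProfile, health: float, target_distance: float,
           target_visible: bool) -> str:
    """Shared decision routine; only the profile values differ between modes."""
    if not target_visible:
        return "search"
    if profile.seeks_cover and health < profile.retreat_health:
        return "take_cover"
    if target_distance <= profile.engage_range:
        return "attack"
    return "advance"
```

Because every bot type goes through the same checks, a bug fixed or a behavior tested in one mode carries over to the other.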

Our testing process was rigorous. We extensively tested bots within a dedicated test scene containing a variety of obstacles: barriers, crates, slopes, stairs, and walls. We observed bot behavior in combat simulations and general navigation scenarios.

Debugging tools, like debug logs and visualized raycasts, were invaluable. We meticulously monitored bot states, particularly the environment checks that drive their decision-making. Accurate environment analysis is crucial; a failed check can lead to incorrect state transitions and undesirable behaviors, such as bots shooting at walls, teammates, or becoming stuck. By carefully observing these checks, we could quickly identify and address any anomalies.

Visualizing raycasts for debugging

Closing Thoughts

Key Learnings: What were the most valuable lessons you learned while creating Spatial Ops?

The most valuable lesson we learned while creating Spatial Ops was the critical importance of the user experience. Meticulously addressing edge cases to ensure players don't become lost or confused proved absolutely essential.

Player Feedback: Has any early feedback from players surprised or influenced how you think about the game moving forward?

The most surprising and influential feedback we received was the enthusiasm players showed for the map editor. We were genuinely surprised by how much people enjoyed creating their own arenas. This positive response has definitely shaped our thinking about future development and potential expansions of the creative tools.

Beyond that, we have seen a growing number of players wanting to team up against the bots, which is key to the next step in the game's evolution. We have some amazing things in the pipeline and strongly believe our players will have an even greater time with what we have planned for them!

Spatial Ops is available on the Meta Horizon Store and Pico (Campaign Edition).